Mirror of https://github.com/sourcegraph/sourcegraph.git, synced 2026-02-06 13:31:54 +00:00

Docs: Replace hyphens in text with em-dashes (#42367)

parent a4eaada3ed
commit 68ed0abf80
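Every change in this commit follows the same pattern. A hyphen with a space on each side, sitting between two words, becomes an em-dash (U+2014) with no surrounding spaces. Leading list bullets and fenced code are left alone. The Python sketch below reproduces that pattern under stated assumptions. It is a hypothetical illustration, not the tooling (if any) used to produce #42367, and the function name is made up.

```python
import re

# A spaced hyphen between two non-space characters, e.g. "defaults - use".
# Hypothetical heuristic that approximates the edits in this commit.
SPACED_HYPHEN = re.compile(r"(?<=\S) - (?=\S)")
EM_DASH = "\u2014"


def replace_spaced_hyphens(markdown: str) -> str:
    """Replace ' - ' in prose with an em-dash, leaving fenced code blocks untouched."""
    out = []
    in_fence = False
    for line in markdown.splitlines(keepends=True):
        if line.lstrip().startswith("```"):
            # Opening or closing fence: flip state and keep the fence line as-is.
            in_fence = not in_fence
            out.append(line)
            continue
        out.append(line if in_fence else SPACED_HYPHEN.sub(EM_DASH, line))
    return "".join(out)


if __name__ == "__main__":
    sample = "- Most environments need changes - see the guide.\n```sh\nls - x\n```\n"
    print(replace_spaced_hyphens(sample))
```

The sketch does not special-case inline code spans, so text such as `a - b` inside backticks would still be rewritten. Any automated pass of this kind would need a manual review of the resulting diff.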

12 CHANGELOG.md

@@ -383,7 +383,7 @@ All notable changes to Sourcegraph are documented in this file.
 - Search query inputs are now backed by the CodeMirror library instead of Monaco. Monaco can be re-enabled by setting `experimentalFeatures.editor` to `"monaco"`. [38584](https://github.com/sourcegraph/sourcegraph/pull/38584)
 - Better search-based code navigation for Python using tree-sitter [#38459](https://github.com/sourcegraph/sourcegraph/pull/38459)
 - Gitserver endpoint access logs can now be enabled by adding `"log": { "gitserver.accessLogs": true }` to the site config. [#38798](https://github.com/sourcegraph/sourcegraph/pull/38798)
-- Code Insights supports a new type of insight - compute-powered insight, currently under the experimental feature flag: `codeInsightsCompute` [#37857](https://github.com/sourcegraph/sourcegraph/issues/37857)
+- Code Insights supports a new type of insight—compute-powered insight, currently under the experimental feature flag: `codeInsightsCompute` [#37857](https://github.com/sourcegraph/sourcegraph/issues/37857)
 - Cache execution result when mounting files in a batch spec. [sourcegraph/src-cli#795](https://github.com/sourcegraph/src-cli/pull/795)
 - Batch Changes changesets open on archived repositories will now move into a [Read-Only state](https://docs.sourcegraph.com/batch_changes/references/faq#why-is-my-changeset-read-only). [#26820](https://github.com/sourcegraph/sourcegraph/issues/26820)
 

@@ -394,7 +394,7 @@ All notable changes to Sourcegraph are documented in this file.
 - Rewrite resource estimator using the latest metrics [#37869](https://github.com/sourcegraph/sourcegraph/pull/37869)
 - Selecting a line multiple times in the file view will only add a single browser history entry [#38204](https://github.com/sourcegraph/sourcegraph/pull/38204)
 - The panels on the homepage (recent searches, etc) are now turned off by default. They can be re-enabled by setting `experimentalFeatures.showEnterpriseHomePanels` to true. [#38431](https://github.com/sourcegraph/sourcegraph/pull/38431)
-- Log sampling is now enabled by default for Sourcegraph components that use the [new internal logging library](https://github.com/sourcegraph/log) - the first 100 identical log entries per second will always be output, but thereafter only every 100th identical message will be output. It can be configured for each service using the environment variables `SRC_LOG_SAMPLING_INITIAL` and `SRC_LOG_SAMPLING_THEREAFTER`, and if `SRC_LOG_SAMPLING_INITIAL` is set to `0` or `-1` the sampling will be disabled entirely. [#38451](https://github.com/sourcegraph/sourcegraph/pull/38451)
+- Log sampling is now enabled by default for Sourcegraph components that use the [new internal logging library](https://github.com/sourcegraph/log)—the first 100 identical log entries per second will always be output, but thereafter only every 100th identical message will be output. It can be configured for each service using the environment variables `SRC_LOG_SAMPLING_INITIAL` and `SRC_LOG_SAMPLING_THEREAFTER`, and if `SRC_LOG_SAMPLING_INITIAL` is set to `0` or `-1` the sampling will be disabled entirely. [#38451](https://github.com/sourcegraph/sourcegraph/pull/38451)
 - Deprecated `experimentalFeatures.enableGitServerCommandExecFilter`. Setting this value has no effect on the code any longer and the code to guard against unknown commands is always enabled.
 - Zoekt now runs with GOGC=25 by default, helping to reduce the memory consumption of Sourcegraph. Previously it ran with GOGC=50, but we noticed a regression when we switched to go 1.18 which contained significant changes to the go garbage collector. [#38708](https://github.com/sourcegraph/sourcegraph/issues/38708)
 - Hide `Publish` action when working with imported changesets. [#37882](https://github.com/sourcegraph/sourcegraph/issues/37882)
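The sampling rule in the log-sampling entry can be modelled in a few lines of Python. This is a sketch under two assumptions. First, the counter is kept per identical message within a single one-second window. Second, "every 100th thereafter" means the 200th, 300th, and so on. The function and parameter names are hypothetical, chosen to mirror `SRC_LOG_SAMPLING_INITIAL` and `SRC_LOG_SAMPLING_THEREAFTER`.

```python
from collections import Counter
from typing import Iterable, List


def sample_window(messages: Iterable[str], initial: int = 100, thereafter: int = 100) -> List[str]:
    """Return what a first-N, then every-Mth sampler would emit within one window.

    Hypothetical model of the policy described in the changelog entry; `initial`
    and `thereafter` stand in for SRC_LOG_SAMPLING_INITIAL / _THEREAFTER.
    """
    if initial <= 0:
        # Per the entry, 0 or -1 disables sampling: everything is emitted.
        return list(messages)
    if thereafter <= 0:
        raise ValueError("thereafter must be positive when sampling is enabled")
    seen: Counter = Counter()
    kept = []
    for msg in messages:
        seen[msg] += 1
        n = seen[msg]
        # Keep the first `initial` copies, then every `thereafter`-th copy after that.
        if n <= initial or (n - initial) % thereafter == 0:
            kept.append(msg)
    return kept
```

Under these assumptions, 1,000 identical messages arriving within one second with the defaults produce 109 emitted lines: the first 100, then the 200th, 300th, ..., 1,000th.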

@@ -1000,7 +1000,7 @@ All notable changes to Sourcegraph are documented in this file.
 - [`deploy-sourcegraph` overlays](https://docs.sourcegraph.com/admin/install/kubernetes/configure#overlays) now use `resources:` instead of the [deprecated `bases:` field](https://kubectl.docs.kubernetes.io/references/kustomize/kustomization/bases/) for referencing Kustomize bases. [deploy-sourcegraph#3606](https://github.com/sourcegraph/deploy-sourcegraph/pull/3606)
 - The `deploy-sourcegraph-docker` Pure Docker deployment scripts and configuration has been moved to the `./pure-docker` subdirectory. [deploy-sourcegraph-docker#454](https://github.com/sourcegraph/deploy-sourcegraph-docker/pull/454)
 - In Kubernetes deployments, setting the `SRC_GIT_SERVERS` environment variable explicitly is no longer needed. Addresses of the gitserver pods will be discovered automatically and in the same numerical order as with the static list. Unset the env var in your `frontend.Deployment.yaml` to make use of this feature. [#24094](https://github.com/sourcegraph/sourcegraph/pull/24094)
-- The consistent hashing scheme used to distribute repositories across indexed-search replicas has changed to improve distribution and reduce load discrepancies. In the next upgrade, indexed-search pods will re-index the majority of repositories since the repo to replica assignments will change. This can take a few hours in large instances, but searches should succeed during that time since a replica will only delete a repo once it has been indexed in the new replica that owns it. You can monitor this process in the Zoekt Index Server Grafana dashboard - the "assigned" repos in "Total number of repos" will spike and then reduce until it becomes the same as "indexed". As a fail-safe, the old consistent hashing scheme can be enabled by setting the `SRC_ENDPOINTS_CONSISTENT_HASH` env var to `consistent(crc32ieee)` in the `sourcegraph-frontend` deployment. [#23921](https://github.com/sourcegraph/sourcegraph/pull/23921)
+- The consistent hashing scheme used to distribute repositories across indexed-search replicas has changed to improve distribution and reduce load discrepancies. In the next upgrade, indexed-search pods will re-index the majority of repositories since the repo to replica assignments will change. This can take a few hours in large instances, but searches should succeed during that time since a replica will only delete a repo once it has been indexed in the new replica that owns it. You can monitor this process in the Zoekt Index Server Grafana dashboard—the "assigned" repos in "Total number of repos" will spike and then reduce until it becomes the same as "indexed". As a fail-safe, the old consistent hashing scheme can be enabled by setting the `SRC_ENDPOINTS_CONSISTENT_HASH` env var to `consistent(crc32ieee)` in the `sourcegraph-frontend` deployment. [#23921](https://github.com/sourcegraph/sourcegraph/pull/23921)
 - In Kubernetes deployments an emptyDir (`/dev/shm`) is now mounted in the `pgsql` deployment to allow Postgres to access more than 64KB shared memory. This value should be configured to match the `shared_buffers` value in your Postgres configuration. [deploy-sourcegraph#3784](https://github.com/sourcegraph/deploy-sourcegraph/pull/3784/)
 
 ### Fixed
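The consistent-hashing entry names a technique without showing it. Below is a generic hash-ring sketch in Python that uses CRC-32 (`zlib.crc32`, which uses the IEEE polynomial). It illustrates consistent hashing in general. It is not Sourcegraph's implementation of the old `consistent(crc32ieee)` scheme or of its replacement. The class name, the number of points per replica, and the key format are all assumptions. The property it demonstrates is that adding a replica moves keys only onto the new replica. Every other assignment stays put.

```python
import bisect
import zlib
from typing import Iterable


class HashRing:
    """Generic consistent-hash ring (illustrative; not Sourcegraph's implementation)."""

    def __init__(self, nodes: Iterable[str], points_per_node: int = 50) -> None:
        # Place several points per node on the ring to even out the distribution.
        ring = sorted(
            (zlib.crc32(f"{node}#{i}".encode()), node)
            for node in nodes
            for i in range(points_per_node)
        )
        self._hashes = [h for h, _ in ring]
        self._nodes = [n for _, n in ring]

    def owner(self, key: str) -> str:
        """Return the node owning `key`: the first ring point after its hash, wrapping around."""
        h = zlib.crc32(key.encode())
        i = bisect.bisect(self._hashes, h) % len(self._hashes)
        return self._nodes[i]
```

Take `HashRing(["r0", "r1"])` and `HashRing(["r0", "r1", "r2"])` as an example. Each key either keeps its owner from the first ring or moves to `r2`.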

@@ -1133,7 +1133,7 @@ API docs is a new experimental feature of Sourcegraph ([learn more](https://docs
 - Symbols in documentation now have distinct icons for e.g. functions/vars/consts/etc.
 - Symbols are now sorted in exported-first, alphabetical order.
 - Repositories without LSIF documentation data now show a friendly error page indicating what languages are supported, how to set it up, etc.
-- API docs can now distinguish between different types of symbols, tests, examples, benchmarks, etc. and whether symbols are public/private - to support filtering in the future.
+- API docs can now distinguish between different types of symbols, tests, examples, benchmarks, etc. and whether symbols are public/private—to support filtering in the future.
 - Only public/exported symbols are included by default for now.
 - URL paths for Go packages are now friendlier, e.g. `/-/docs/cmd/frontend/auth` instead of `/-/docs/cmd-frontend-auth`.
 - URLs are now formatted by the language indexer, in a way that makes sense for the language, e.g. `#Mocks.CreateUserAndSave` instead of `#ypeMocksCreateUserAndSave` for a Go method `CreateUserAndSave` on type `Mocks`.

@@ -1883,7 +1883,7 @@ API docs is a new experimental feature of Sourcegraph ([learn more](https://docs
 - The Site-admin > Pings page no longer incorrectly indicates that pings are disabled when they aren't. [#11229](https://github.com/sourcegraph/sourcegraph/pull/11229)
 - Match counts are now accurately reported for indexed search. [#11242](https://github.com/sourcegraph/sourcegraph/pull/11242)
 - When background permissions syncing is enabled, it is now possible to only enforce permissions for repositories from selected code hosts (instead of enforcing permissions for repositories from all code hosts). [#11336](https://github.com/sourcegraph/sourcegraph/pull/11336)
-- When more than 200+ repository revisions in a search are unindexed (very rare), the remaining repositories are reported as missing instead of Sourcegraph issuing e.g. several thousand unindexed search requests which causes system slowness and ultimately times out - ensuring searches are still fast even if there are indexing issues on a deployment of Sourcegraph. This does not apply if `index:no` is present in the query.
+- When more than 200+ repository revisions in a search are unindexed (very rare), the remaining repositories are reported as missing instead of Sourcegraph issuing e.g. several thousand unindexed search requests which causes system slowness and ultimately times out—ensuring searches are still fast even if there are indexing issues on a deployment of Sourcegraph. This does not apply if `index:no` is present in the query.
 
 ### Removed
 

@@ -2244,7 +2244,7 @@ This is `3.12.8` release with internal infrastructure fixes to publish the docke
 
 ### Fixed
 
-- Fixed an issue in `sourcegraph/*` Docker images where data folders were either not created or had incorrect permissions - preventing the use of Docker volumes. [#7991](https://github.com/sourcegraph/sourcegraph/pull/7991)
+- Fixed an issue in `sourcegraph/*` Docker images where data folders were either not created or had incorrect permissions—preventing the use of Docker volumes. [#7991](https://github.com/sourcegraph/sourcegraph/pull/7991)
 
 ## 3.12.2
 


@@ -1,5 +1,5 @@
 # SAML
-Security Assertion Markup Language (SAML) is a common web protocol used to pass authorized credentials between two web applications, a service provider (SP) - Sourcegraph in this instance and an Identity Provider (IdP). This communication is conducted via XML assertions.
+Security Assertion Markup Language (SAML) is a common web protocol used to pass authorized credentials between two web applications, a service provider (SP)—Sourcegraph in this instance and an Identity Provider (IdP). This communication is conducted via XML assertions.
 
 ## Identity Providers
 

@@ -130,7 +130,7 @@ Use the following filters to restrict how users can create accounts and sign in
 
 Special characters such as the `&` (ampersand) will be encoded in the XML document, the format used by SAML. For example, if you have a group `Dogs & cats` set in your Identity Provider, it will be shown as `Dogs &amp; cats` in the XML assertions.
 
-This is expected - just avoid using the encoded character when adding a group name to the `allowGroups` array.
+This is expected—just avoid using the encoded character when adding a group name to the `allowGroups` array.
 
 _Instead of_
 ```json

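Python's standard library can reproduce the ampersand behaviour described in the SAML hunk. `xml.sax.saxutils.escape` produces the encoded form that appears in the raw XML. An XML parser returns the decoded name, and the decoded name is the form to put in `allowGroups`. The `<Group>` element below is a simplified, hypothetical stand-in, not the real SAML attribute structure.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape


def encoded_group(name: str) -> str:
    """How a group name is written inside an XML document (`&` becomes `&amp;`)."""
    return escape(name)


def decoded_group(xml_text: str) -> str:
    """Parse a simplified, hypothetical <Group> element and return its decoded text."""
    return ET.fromstring(f"<Group>{xml_text}</Group>").text or ""


raw = encoded_group("Dogs & cats")
print(raw)                 # Dogs &amp; cats
print(decoded_group(raw))  # Dogs & cats
```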

@@ -38,7 +38,7 @@ We **strongly** recommend you fork the [Sourcegraph with Kubernetes reference re
 
 <span class="virtual-br"></span>
 
-> NOTE: We do not recommend storing secrets in the repository itself - instead, consider leveraging [Kubernetes's Secret objects](https://kubernetes.io/docs/concepts/configuration/secret).
+> NOTE: We do not recommend storing secrets in the repository itself—instead, consider leveraging [Kubernetes's Secret objects](https://kubernetes.io/docs/concepts/configuration/secret).
 
 ### Create a fork or private duplicate
 


@@ -26,7 +26,7 @@ Our Helm chart has a lot of sensible defaults baked into the values.yaml. Not on
 ## High-level overview of how to use Helm with Sourcegraph
 
 1. Prepare any required customizations
-- Most environments are likely to need changes from the defaults - use the guidance in [Configuration](#configuration).
+- Most environments are likely to need changes from the defaults—use the guidance in [Configuration](#configuration).
 1. Review the changes
 - There are [three mechanisms](#reviewing-changes) that can be used to review any customizations made, this is an optional step, but may be useful the first time you deploy Sourcegraph, for peace of mind.
 1. Select your deployment method and follow the guidance:

@@ -78,7 +78,7 @@ Providing the override file to Helm is done with the inclusion of the values fla
 ```sh
 helm upgrade --install --values ./override.yaml --version 4.4.1 sourcegraph sourcegraph/sourcegraph
 ```
-When making configuration changes, it's recommended to review the changes that will be applied - see [Reviewing Changes](#reviewing-changes).
+When making configuration changes, it's recommended to review the changes that will be applied—see [Reviewing Changes](#reviewing-changes).
 
 ### Specific Configuration Scenarios
 
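Helm layers the file passed with `--values` over the chart's own `values.yaml`. A rough mental model is that nested maps merge key by key, while lists and scalar values are replaced outright. The Python sketch below implements only that rule. It leaves out other Helm behaviour, such as a `null` override deleting a key. The keys in the example are illustrative and are not the chart's actual defaults.

```python
import copy
from typing import Any, Dict


def merge_values(defaults: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Return `defaults` with `overrides` applied, without mutating either input.

    Nested dicts are merged recursively; any other override value (list, string,
    number) replaces the default wholesale.
    """
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Illustrative values only; not the Sourcegraph chart's real defaults.
defaults = {"storageClass": {"provisioner": "example.com/disk", "parameters": {"type": "standard"}}}
override = {"storageClass": {"parameters": {"type": "ssd"}}}
print(merge_values(defaults, override))
```

With this model, an override file needs to contain only the keys that change. Untouched sibling keys, like `provisioner` above, keep their defaults.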

@@ -464,7 +464,7 @@ helm repo add sourcegraph https://helm.sourcegraph.com/release
 
 #### Steps {#gke-steps}
 
-**1** – Create your override file and add in any configuration override settings you need - see [configuration](#configuration) for more information on override files and the options for what can be configured.
+**1** – Create your override file and add in any configuration override settings you need—see [configuration](#configuration) for more information on override files and the options for what can be configured.
 
 Add into your override file the below values to configure both your ingress hostname and your storage class. We recommend configuring Ingress to use [Container-native load balancing] to expose Sourcegraph publicly on a domain of your choosing and setting the Storage Class to use [Compute Engine persistent disk]. (For an example file see [override.yaml](https://github.com/sourcegraph/deploy-sourcegraph-helm/tree/main/charts/sourcegraph/examples/gcp/override.yaml))
 

@@ -598,7 +598,7 @@ helm repo add sourcegraph https://helm.sourcegraph.com/release
 
 #### Steps {#eks-steps}
 
-**1** – Create your override file and add in any configuration override settings you need - see [configuration](#configuration) for more information on override files and the options around what can be configured.
+**1** – Create your override file and add in any configuration override settings you need—see [configuration](#configuration) for more information on override files and the options around what can be configured.
 
 We recommend adding the following values into your override file to configure Ingress to use [AWS Load Balancer Controller] to expose Sourcegraph publicly on a domain of your choosing, and to configure the Storage Class to use [AWS EBS CSI driver]. For an example, see [override.yaml](https://github.com/sourcegraph/deploy-sourcegraph-helm/tree/main/charts/sourcegraph/examples/aws/override.yaml).
 

@@ -683,7 +683,7 @@ helm repo add sourcegraph https://helm.sourcegraph.com/release
 
 #### Steps {#aks-steps}
 
-**1** – Create your override file and add in any configuration override settings you need - see [configuration](#configuration) for more information on override files and the options around what can be configured.
+**1** – Create your override file and add in any configuration override settings you need—see [configuration](#configuration) for more information on override files and the options around what can be configured.
 
 Add into your override file the below values to configure both your ingress hostname and your storage class. We recommend configuring Ingress to use [Application Gateway](https://azure.microsoft.com/en-us/services/application-gateway) to expose Sourcegraph publicly on a domain of your choosing and Storage Class to use [Azure Disk CSI driver](https://docs.microsoft.com/en-us/azure/aks/azure-disk-csi). For an example see [override.yaml](https://github.com/sourcegraph/deploy-sourcegraph-helm/tree/main/charts/sourcegraph/examples/azure/override.yaml).
 

@@ -769,7 +769,7 @@ helm repo add sourcegraph https://helm.sourcegraph.com/release
 
 #### Steps {#others-steps}
 
-**1** – Create your override file and add in any configuration override settings you need - see [configuration](#configuration) for more information on override files and the options around what can be configured.
+**1** – Create your override file and add in any configuration override settings you need—see [configuration](#configuration) for more information on override files and the options around what can be configured.
 
 Read <https://kubernetes.io/docs/concepts/storage/storage-classes/> to configure the `storageClass.provisioner` and `storageClass.parameters` fields for your cloud provider or consult documentation of the storage solution in your on-prem environment.
 

@@ -898,7 +898,7 @@ A [standard upgrade](../../updates/index.md#standard-upgrades) occurs between tw
 helm repo update sourcegraph
 ```
 
-1. (Optional) Review the changes that will be applied - see [Reviewing Changes](#reviewing-changes) for options.
+1. (Optional) Review the changes that will be applied—see [Reviewing Changes](#reviewing-changes) for options.
 
 1. Install the new version:
 


@@ -7,7 +7,7 @@ Sourcegraph supports the use of [Kustomize](https://kustomize.io) to modify and
 
 Some benefits of using Kustomize to generate manifests instead of modifying the base directly include:
 
-- Reduce the odds of encountering a merge conflict when [updating Sourcegraph](update.md) - they allow you to separate your unique changes from the upstream base files Sourcegraph provides.
+- Reduce the odds of encountering a merge conflict when [updating Sourcegraph](update.md)—they allow you to separate your unique changes from the upstream base files Sourcegraph provides.
 - Better enable Sourcegraph to support you if you run into issues, because how your deployment varies from our defaults is encapsulated in a small set of files.
 
 ## Using Kustomize

@@ -73,7 +73,7 @@ kubectl apply --prune -l deploy=sourcegraph -f generated-cluster --recursive
 We recommend that you:
 
 - [Update the `./overlay-generate-cluster` script](./operations.md#applying-manifests) to apply the generated manifests from the `generated-cluster` directory with something like the above snippet
-- Commit your overlays changes separately - see our [customization guide](#customizations) for more details.
+- Commit your overlays changes separately—see our [customization guide](#customizations) for more details.
 
 You can now get started with using overlays:
 


@@ -78,7 +78,7 @@ To perform a multi-version upgrade on a Sourcegraph instance running on Kubernet
 - If using an external database, follow the [upgrading external PostgreSQL instances](../../postgres.md#upgrading-external-postgresql-instances) guide.
 - Otherwise, perform the following steps from the [upgrading internal Postgres instances](../../postgres.md#upgrading-internal-postgresql-instances) guide:
 1. It's assumed that your fork of `deploy-sourcegraph` is up to date with your instance's current version. Pull the upstream changes for `v3.27.0` and resolve any git merge conflicts. We need to temporarily boot the containers defined at this specific version to rewrite existing data to the new Postgres 12 format.
-1. Run `kubectl apply -l deploy=sourcegraph -f base/pgsql` to launch a new Postgres 12 container and rewrite the old Postgres 11 data. This may take a while, but streaming container logs should show progress. **NOTE**: The Postgres migration requires enough capacity in its attached volume to accommodate an additional copy of the data currently on disk. Resize the volume now if necessary - the container will fail to start if there is not enough free disk space.
+1. Run `kubectl apply -l deploy=sourcegraph -f base/pgsql` to launch a new Postgres 12 container and rewrite the old Postgres 11 data. This may take a while, but streaming container logs should show progress. **NOTE**: The Postgres migration requires enough capacity in its attached volume to accommodate an additional copy of the data currently on disk. Resize the volume now if necessary—the container will fail to start if there is not enough free disk space.
 1. Wait until the database container is accepting connections. Once ready, run the command `kubectl exec pgsql -- psql -U sg -c 'REINDEX database sg;'` issue a reindex command to Postgres to repair indexes that were silently invalidated by the previous data rewrite step. **If you skip this step**, then some data may become inaccessible under normal operation, the following steps are not guaranteed to work, and **data loss will occur**.
 1. Follow the same steps for the `codeintel-db`:
 - Run `kubectl apply -l deploy=sourcegraph -f base/codeintel-db` to launch Postgres 12.


@@ -85,7 +85,7 @@ We suggest using an AWS Application Load Balancer (ALB) to manage HTTPS connecti
 Open the [AWS Certificate Manager console](https://console.aws.amazon.com/acm) to **Request a certificate**:
 
 - **Domain names**: Fully qualified domain name: your domain
-- **Select validation method**: DNS validation - recommended
+- **Select validation method**: DNS validation—recommended
 
 After the certificate has been created, you will need to attach the `CNAME name` and `CNAME values` to your DNS.
 


@@ -109,7 +109,7 @@ More advanced users can also refer to [our FAQ item about custom consumption of
 
 ### Can I consume Sourcegraph's metrics in my own monitoring system (Datadog, New Relic, etc.)?
 
-Sourcegraph provides [high-level alerting metrics](./observability/metrics.md#high-level-alerting-metrics) which you can integrate into your own monitoring system - see the [alerting custom consumption guide](./observability/alerting_custom_consumption.md) for more details.
+Sourcegraph provides [high-level alerting metrics](./observability/metrics.md#high-level-alerting-metrics) which you can integrate into your own monitoring system—see the [alerting custom consumption guide](./observability/alerting_custom_consumption.md) for more details.
 
 While it is technically possible to consume all of Sourcegraph's metrics in an external system, our recommendation is to utilize the builtin monitoring tools and configure Sourcegraph to [send alerts to your own PagerDuty, Slack, email, etc.](./observability/alerting.md). Metrics and thresholds can change with each release, therefore manually defining the alerts required to monitor Sourcegraph's health is not recommended. Sourcegraph automatically updates the dashboards and alerts on each release to ensure the displayed information is up-to-date.
 


@@ -189,7 +189,7 @@ upto \
 
 ### downto
 
-The `downto` command revert any applied migrations that are children of the given targets - this effectively "resets" the schema to the target version.
+The `downto` command revert any applied migrations that are children of the given targets—this effectively "resets" the schema to the target version.
 
 ```
 downto \

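The `downto` description implies a small graph computation. Find every applied migration that descends from the targets, then revert those in child-before-parent order. The Python sketch below follows that reading under a hypothetical data model: a mapping from each migration ID to its parent IDs. It is not the migrator's actual code, and the function name is made up.

```python
from graphlib import TopologicalSorter
from typing import Dict, Iterable, List, Set


def downto_plan(parents: Dict[int, List[int]], applied: Iterable[int], targets: Iterable[int]) -> List[int]:
    """Applied migrations below `targets` to revert, children before parents.

    `parents` maps each migration ID to the IDs it depends on (hypothetical model).
    The targets themselves remain applied.
    """
    targets = list(targets)
    children: Dict[int, Set[int]] = {}
    for migration, deps in parents.items():
        for dep in deps:
            children.setdefault(dep, set()).add(migration)

    # Walk the graph downwards from the targets to collect every descendant.
    descendants: Set[int] = set()
    stack = list(targets)
    while stack:
        for child in children.get(stack.pop(), ()):
            if child not in descendants:
                descendants.add(child)
                stack.append(child)

    to_revert = (descendants - set(targets)) & set(applied)
    # static_order() lists parents before children; reverse it for a safe revert order.
    order = list(TopologicalSorter(parents).static_order())
    return [m for m in reversed(order) if m in to_revert]
```

For example, `downto_plan({1: [], 2: [1], 3: [2]}, applied=[1, 2, 3], targets=[1])` returns `[3, 2]`. Migration 3 is reverted before its parent 2, and target 1 stays applied.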

@@ -2,7 +2,7 @@
 
 > NOTE: The following applies only users that use our built-in databases. Users that use external databases (e.x: Amazon RDS, Google Cloud SQL, etc.) are not affected, and can ignore this page.
 
-In Sourcegraph 3.31.x, both the **built-in** main Postgres (`pgsql`) and codeintel (`codeintel-db`) databases have switched to an Alpine-based Docker image - this has been done to resolve vulnerabilities found in Debian but not Alpine. Upon upgrading, Sourcegraph will need to re-index the entire database. This process requires some preparation, so please read through **all** of the instructions on the rest of the page beforehand.
+In Sourcegraph 3.31.x, both the **built-in** main Postgres (`pgsql`) and codeintel (`codeintel-db`) databases have switched to an Alpine-based Docker image—this has been done to resolve vulnerabilities found in Debian but not Alpine. Upon upgrading, Sourcegraph will need to re-index the entire database. This process requires some preparation, so please read through **all** of the instructions on the rest of the page beforehand.
 
 For customers who previously upgraded to 3.27, there is a possibility that upgrade inadvertently introduced a major glibc change. This may have caused corruption in some indexes. While this is unlikely to have been noticeable up til now, upgrading to 3.31 (or more specifically the subsequent reindexing) will fail unless certain steps are taken before hand. Customers who have installed fresh from 3.27 or later should be unaffected by this potential data corruption, but are still likely to see the delay caused by the reindexing following upgrade.
 


@@ -46,7 +46,7 @@ The following sections guide you through the migration process.
 
 ### Deploying Sourcegraph
 
-We offer several methods for deploying Sourcegraph for various requirements - see [Getting started](../../index.md#getting-started) to learn more about how to get started with Sourcegraph.
+We offer several methods for deploying Sourcegraph for various requirements—see [Getting started](../../index.md#getting-started) to learn more about how to get started with Sourcegraph.
 
 Choose a deployment option and follow the instructions. When you've signed into your Sourcegraph instance as a site admin, continue to the next section.
 


@@ -2355,7 +2355,7 @@ Generated query for critical alert: `min((pg_up) <= 0)`
 
 **Next steps**
 
-- Drop and re-create the invalid trigger - please contact Sourcegraph to supply the trigger definition.
+- Drop and re-create the invalid trigger—please contact Sourcegraph to supply the trigger definition.
 - More help interpreting this metric is available in the [dashboards reference](./dashboards.md#postgres-invalid-indexes).
 - **Silence this alert:** If you are aware of this alert and want to silence notifications for it, add the following to your site configuration and set a reminder to re-evaluate the alert:
 

@@ -2417,7 +2417,7 @@ Generated query for warning alert: `max((pg_exporter_last_scrape_error) >= 1)`
 
 **Next steps**
 
-- The database migration has been in progress for 5 or more minutes - please contact Sourcegraph if this persists.
+- The database migration has been in progress for 5 or more minutes—please contact Sourcegraph if this persists.
 - More help interpreting this metric is available in the [dashboards reference](./dashboards.md#postgres-migration-in-progress).
 - **Silence this alert:** If you are aware of this alert and want to silence notifications for it, add the following to your site configuration and set a reminder to re-evaluate the alert:
 


@@ -15,7 +15,7 @@ Site admins can view the Grafana monitoring dashboards on a Sourcegraph instance
 
 A complete [dashboard reference](dashboards.md) is available for more context on our available service dashboards and panels.
 
-Additional dashboards can also be set up - see [Grafana configuration](#grafana-configuration) for more details.
+Additional dashboards can also be set up—see [Grafana configuration](#grafana-configuration) for more details.
 
 #### View documentation
 


@@ -2,7 +2,7 @@
 
 <span class="badge badge-note">Sourcegraph 4.0+</span>
 
-> WARNING: Sourcegraph is actively working on implementing [OpenTelemetry](https://opentelemetry.io/) for all observability data. **The first - and currently only - [signal](https://opentelemetry.io/docs/concepts/signals/) to be fully integrated is [tracing](./tracing.md)**.
+> WARNING: Sourcegraph is actively working on implementing [OpenTelemetry](https://opentelemetry.io/) for all observability data. **The first—and currently only—[signal](https://opentelemetry.io/docs/concepts/signals/) to be fully integrated is [tracing](./tracing.md)**.
 
 Sourcegraph exports OpenTelemetry data to a bundled [OpenTelemetry Collector](https://opentelemetry.io/docs/collector/) instance.
 This service can be configured to ingest, process, and then export observability data to an observability backend of choice.

@ -11,7 +11,7 @@ This approach offers a great deal of flexibility.

|

||||

## Configuration

|

||||

|

||||

Sourcegraph's OpenTelemetry Collector is deployed with a [custom image, `sourcegraph/opentelemetry-collector`](https://sourcegraph.com/github.com/sourcegraph/sourcegraph/-/tree/docker-images/opentelemetry-collector), and is configured with a configuration YAML file.

|

||||

By default, `sourcegraph/opentelemetry-collector` is configured to not do anything with the data it receives, but [exporters to various backends](#exporters) can be configured for each signal we currently support - **currently, only [traces data](#tracing) is supported**.

|

||||

By default, `sourcegraph/opentelemetry-collector` is configured to not do anything with the data it receives, but [exporters to various backends](#exporters) can be configured for each signal we currently support—**currently, only [traces data](#tracing) is supported**.

|

||||

|

||||

Refer to the [documentation](https://opentelemetry.io/docs/collector/configuration/) for an in-depth explanation of the parts that compose a full collector pipeline.

@@ -34,7 +34,7 @@ To learn more about Sourcegraph traces in general, refer to our [tracing documen

> NOTE: In case you require an additional exporter from the [`opentelemetry-collector-contrib` repository](https://github.com/open-telemetry/opentelemetry-collector-contrib/tree/main/exporter), please [open an issue](https://github.com/sourcegraph/sourcegraph/issues).

Basic configuration for each tracing backend type is described below. Note that just adding a backend to the `exporters` block does not enable it - it must also be added to the `service` block.

Basic configuration for each tracing backend type is described below. Note that just adding a backend to the `exporters` block does not enable it—it must also be added to the `service` block.

Refer to the next snippet for a basic but complete example, which is the [default out-of-the-box configuration](https://sourcegraph.com/github.com/sourcegraph/sourcegraph/-/blob/docker-images/opentelemetry-collector/configs/logging.yaml):

```yaml

@@ -169,7 +169,7 @@ service:

Exporters send observability data from OpenTelemetry collector to desired backends.

Each exporter can support one, or several, OpenTelemetry signals.

This section outlines some common configurations for exporters - for more details, refer to the [official OpenTelemetry exporters documentation](https://opentelemetry.io/docs/collector/configuration/#exporters).

This section outlines some common configurations for exporters—for more details, refer to the [official OpenTelemetry exporters documentation](https://opentelemetry.io/docs/collector/configuration/#exporters).

> NOTE: In case you require an additional exporter from the [`opentelemetry-collector-contrib` repository](https://github.com/open-telemetry/opentelemetry-collector-contrib/tree/main/exporter), please [open an issue](https://github.com/sourcegraph/sourcegraph/issues).

@@ -209,14 +209,14 @@ exporters:

Refer to the [`jaeger` exporter documentation](https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/exporter/jaegerexporter/README.md) for all options.

Most Sourcegraph deployment methods still ship with an opt-in Jaeger instance - to set this up, follow the relevant deployment guides, which will also set up the appropriate configuration for you:

Most Sourcegraph deployment methods still ship with an opt-in Jaeger instance—to set this up, follow the relevant deployment guides, which will also set up the appropriate configuration for you:

- [Kubernetes (with Helm)](../deploy/kubernetes/helm.md#enable-the-bundled-jaeger-deployment)

- [Kubernetes (without Helm)](../deploy/kubernetes/configure.md#enable-the-bundled-jaeger-deployment)

- [Docker Compose](../deploy/docker-compose/operations.md#enable-the-bundled-jaeger-deployment)

If you wish to do additional configuration or connect to your own Jaeger instance, the deployed Collector image is bundled with a [basic configuration with Jaeger exporting](https://sourcegraph.com/github.com/sourcegraph/sourcegraph/-/blob/docker-images/opentelemetry-collector/configs/jaeger.yaml).

If this configuration serves your needs, you do not have to provide a separate config - the Collector startup command can be set to `/bin/otelcol-sourcegraph --config=/etc/otel-collector/configs/jaeger.yaml`. Note that this requires the environment variable `$JAEGER_HOST` to be set on the Collector instance (i.e. the container in Kubernetes or Docker Compose):

If this configuration serves your needs, you do not have to provide a separate config—the Collector startup command can be set to `/bin/otelcol-sourcegraph --config=/etc/otel-collector/configs/jaeger.yaml`. Note that this requires the environment variable `$JAEGER_HOST` to be set on the Collector instance (i.e. the container in Kubernetes or Docker Compose):

```yaml

exporters:

@@ -9,7 +9,7 @@ There are currently three modes:

`"selective"` is the recommended default, because collecting traces on all requests can be quite memory- and network-intensive.

If you have a large Sourcegraph instance (e.g., more than 10k repositories), turn this on with caution.

Note that the policies above are implemented at an application level - to sample all traces, please configure your tracing backend directly.

Note that the policies above are implemented at an application level—to sample all traces, please configure your tracing backend directly.
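
The modes can be pictured as a per-request decision made inside the application. This is a minimal Go sketch, not Sourcegraph's implementation: the mode names `"none"` and `"all"` alongside `"selective"`, and the boolean `traceRequested` standing in for however a request opts in to tracing, are assumptions.

```go
package main

import "fmt"

// SamplingMode is a hypothetical stand-in for the tracing sampling setting.
type SamplingMode string

const (
	ModeNone      SamplingMode = "none"      // assumed mode name
	ModeSelective SamplingMode = "selective" // recommended default
	ModeAll       SamplingMode = "all"       // assumed mode name
)

// shouldTrace makes the application-level sampling decision for one request.
// traceRequested is an assumed flag for a request that explicitly asks to be traced.
func shouldTrace(mode SamplingMode, traceRequested bool) bool {
	switch mode {
	case ModeAll:
		return true // trace everything: the most memory- and network-intensive option
	case ModeSelective:
		return traceRequested // trace only requests that opt in
	default:
		return false // "none" or an unrecognized value: record nothing
	}
}

func main() {
	fmt.Println(shouldTrace(ModeSelective, false)) // false
	fmt.Println(shouldTrace(ModeSelective, true))  // true
	fmt.Println(shouldTrace(ModeAll, false))       // true
}
```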

We support the following tracing backend types:

@@ -84,7 +84,7 @@ There are two ways to export traces to Jaeger:

1. **Recommended:** Configuring the [OpenTelemetry Collector](opentelemetry.md) (`"type": "opentelemetry"` in `observability.tracing`) to [send traces to a Jaeger instance](opentelemetry.md#jaeger).

2. Using the legacy `"type": "jaeger"` configuration in `observability.tracing` to send spans directly to Jaeger.

We strongly recommend using option 1 to use Jaeger, which is supported via opt-in mechanisms for each of our core deployment methods - to learn more, refer to the [Jaeger exporter documentation](opentelemetry.md#jaeger).

We strongly recommend using option 1 to use Jaeger, which is supported via opt-in mechanisms for each of our core deployment methods—to learn more, refer to the [Jaeger exporter documentation](opentelemetry.md#jaeger).

To use option 2 instead, which enables behaviour similar to how Sourcegraph exported traces before Sourcegraph 4.0, [Jaeger client environment variables](https://github.com/jaegertracing/jaeger-client-go#environment-variables) must be set on all services for traces to export to Jaeger correctly using `"observability.tracing": { "type": "jaeger" }`.

@@ -1,12 +1,12 @@

# Using Perforce depots with Sourcegraph

Sourcegraph supports [Perforce](https://perforce.com) depots using the [git p4](https://git-scm.com/docs/git-p4) adapter or [p4-fusion](https://github.com/salesforce/p4-fusion). This creates an equivalent Git repository from a Perforce depot. An experimental feature can be enabled to [configure Perforce depots through the Sourcegraph UI](#add-a-perforce-code-host). For Sourcegraph <3.25.1, Sourcegraph's tool for serving local directories is required - see [adding depots using `src serve-git`](#add-perforce-depos-using-src-serve-git).

Sourcegraph supports [Perforce](https://perforce.com) depots using the [git p4](https://git-scm.com/docs/git-p4) adapter or [p4-fusion](https://github.com/salesforce/p4-fusion). This creates an equivalent Git repository from a Perforce depot. An experimental feature can be enabled to [configure Perforce depots through the Sourcegraph UI](#add-a-perforce-code-host). For Sourcegraph <3.25.1, Sourcegraph's tool for serving local directories is required—see [adding depots using `src serve-git`](#add-perforce-depos-using-src-serve-git).

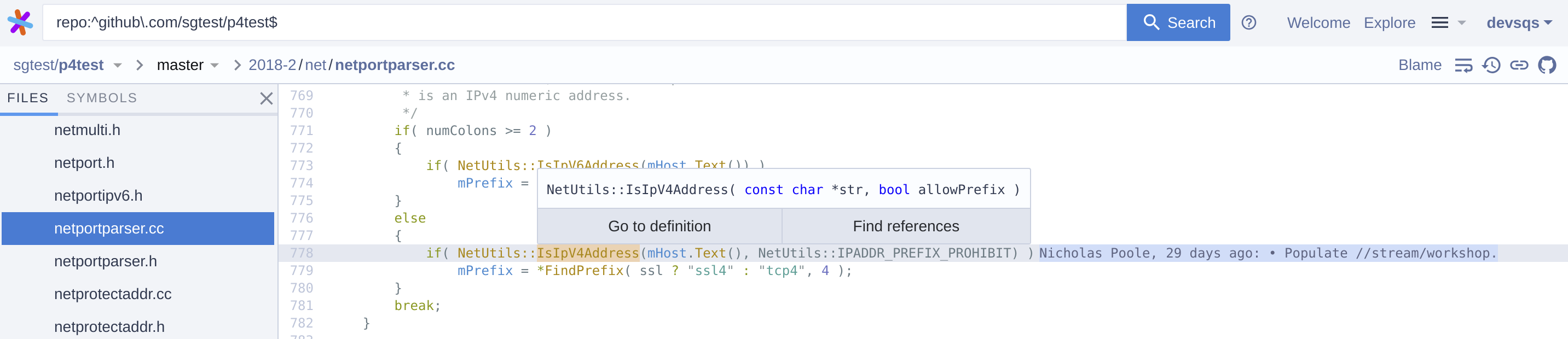

Screenshot of using Sourcegraph for code navigation in a Perforce depot:

> NOTE: Perforce support is a work in progress - see [known issues and limitations](#known-issues-and-limitations).

> NOTE: Perforce support is a work in progress—see [known issues and limitations](#known-issues-and-limitations).

## Add a Perforce code host

@@ -294,7 +294,7 @@ Sourcegraph's background permissions syncing is a 2-way sync that combines data

Both types of sync happen [repeatedly and continuously based on a variety of events and criteria](#permissions-sync-scheduling).

> NOTE: Failure cases for each type of sync are generally handled gracefully - unless the code host returns a non-error response, the result is not used to update permissions. This means that permissions may become outdated, but will usually not be deleted, if syncs fail.

> NOTE: Failure cases for each type of sync are generally handled gracefully—unless the code host returns a non-error response, the result is not used to update permissions. This means that permissions may become outdated, but will usually not be deleted, if syncs fail.

Background permissions syncing enables:

@@ -311,7 +311,7 @@ The two types of sync, [user-centric and repository-centric](#background-permiss

- **Complete sync** means a user has completed user-centric permissions sync (or a repository has completed a repository-centric sync), which indicates the most accurate permissions from the code host have been persisted to Sourcegraph for the user (or vice versa for repositories).

- **Incremental sync** means a user has *not* yet completed a recent user-centric permissions sync, but has been recently granted some permissions from a repository-centric sync (or vice versa for repositories).

- For example, if a user has *not* had a user-centric permissions sync, but has been granted permissions from one or more repository-centric syncs, the user will have only completed an incremental sync. In this state, a user might not have access to all repositories they should have access to, but will incrementally receive more access as repository-centric syncs complete.

- It is possible to be in an incremental sync state where a user or repository has effectively completed a complete sync, and all access rules are aligned with what is in the code host - for example, if a user completed a complete sync and a single repository is added, the user will be granted access to that repository through incremental sync, so the user will have full access to everything the user should have access to despite being in an incremental sync state.

- It is possible to be in an incremental sync state where a user or repository has effectively completed a complete sync, and all access rules are aligned with what is in the code host—for example, if a user completed a complete sync and a single repository is added, the user will be granted access to that repository through incremental sync, so the user will have full access to everything the user should have access to despite being in an incremental sync state.
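
The two states can be modelled as a small piece of per-user bookkeeping. The Go sketch below uses hypothetical types (not Sourcegraph's schema): a user-centric sync replaces the user's repository set and marks it complete, while a repository-centric sync adds a single repository and marks the state incremental. The last step mirrors the example above: access is fully up to date, yet the state reads incremental.

```go
package main

import "fmt"

// SyncState is a hypothetical summary of how a user's permissions were last updated.
type SyncState int

const (
	NotSynced   SyncState = iota // no permissions recorded yet
	Incremental                  // latest change came from a repository-centric sync
	Complete                     // latest change came from a user-centric sync
)

func (s SyncState) String() string {
	switch s {
	case Complete:
		return "complete"
	case Incremental:
		return "incremental"
	default:
		return "not synced"
	}
}

// UserPerms tracks the repositories a user may access and how they were granted.
type UserPerms struct {
	Repos map[string]bool
	State SyncState
}

// userCentricSync replaces the user's repository set with everything the code
// host reported for that user, which makes the state complete.
func (u *UserPerms) userCentricSync(repos []string) {
	u.Repos = make(map[string]bool, len(repos))
	for _, r := range repos {
		u.Repos[r] = true
	}
	u.State = Complete
}

// repoCentricGrant adds access to one repository discovered by a
// repository-centric sync; on its own this only counts as incremental.
func (u *UserPerms) repoCentricGrant(repo string) {
	if u.Repos == nil {
		u.Repos = map[string]bool{}
	}
	u.Repos[repo] = true
	u.State = Incremental
}

func main() {
	u := &UserPerms{}
	u.repoCentricGrant("repo-a")
	fmt.Println(u.State, len(u.Repos)) // incremental 1
	u.userCentricSync([]string{"repo-a", "repo-b"})
	fmt.Println(u.State, len(u.Repos)) // complete 2
	u.repoCentricGrant("repo-c") // e.g. a repository added after the complete sync
	fmt.Println(u.State, len(u.Repos)) // incremental 3
}
```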

The state of permissions is [repeatedly and continuously updated in the background](#permissions-sync-scheduling).

@@ -355,7 +355,7 @@ For example, permissions syncs may be scheduled:

- When a relevant [webhook is configured and received](#triggering-syncs-with-webhooks)

- When a [manual sync is scheduled](#manually-scheduling-a-sync)

When a sync is scheduled, it is added to a queue that is steadily processed to avoid overloading the code host - a sync [might not happen immediately](#permissions-sync-duration). Prioritization of permissions sync also happens to, for example, ensure users or repositories with no permissions get processed first.

When a sync is scheduled, it is added to a queue that is steadily processed to avoid overloading the code host—a sync [might not happen immediately](#permissions-sync-duration). Prioritization of permissions sync also happens to, for example, ensure users or repositories with no permissions get processed first.

There are a variety of options in the site configuration to tune how the permissions sync requests are scheduled and processed:

@@ -408,7 +408,7 @@ To further mitigate long sync times and API request load, Sourcegraph can also l

### Provider-specific optimizations

Each provider can implement optimizations to improve [sync performance](#permissions-sync-duration) and [up-to-dateness of permissions](#triggering-syncs-with-webhooks) - please refer to the relevant provider documentation on this page for more details.

Each provider can implement optimizations to improve [sync performance](#permissions-sync-duration) and [up-to-dateness of permissions](#triggering-syncs-with-webhooks)—please refer to the relevant provider documentation on this page for more details.

#### Triggering syncs with webhooks

@@ -663,4 +663,4 @@ To attach a user's Sourcegraph account to all relevant code host accounts, a spe

3. On the sign in page, sign in again using the next code host's [authentication provider](../auth/index.md)

4. Once repeated across all relevant code hosts, users should now have access to repositories on all code hosts and have all repository permissions enforced.

> NOTE: These steps are not required at every sign in - only during the initial account creation.

> NOTE: These steps are not required at every sign in—only during the initial account creation.

@@ -75,7 +75,7 @@ func DoSomethingAtomicOverTwoStores(ctx context.Context, store *MyStore, otherSt

The [`Store`](https://sourcegraph.com/github.com/sourcegraph/sourcegraph@v3.25.0/-/blob/internal/database/basestore/store.go#L37:6) struct defined in [github.com/sourcegraph/sourcegraph/internal/database/basestore](https://sourcegraph.com/github.com/sourcegraph/sourcegraph@v3.25.0/-/tree/internal/database/basestore) can be used to quickly bootstrap the base functionalities described above.

First, _embed_ a basestore pointer into your own store instance, as follows. Your store may need access to additional data for configuration or state - additional fields can be freely defined on this struct.

First, _embed_ a basestore pointer into your own store instance, as follows. Your store may need access to additional data for configuration or state—additional fields can be freely defined on this struct.

```go

import "github.com/sourcegraph/sourcegraph/internal/database/basestore"

@@ -40,7 +40,7 @@ It starts in [`src-cli`](https://github.com/sourcegraph/src-cli):

1. `src batch preview` starts [the "preview" command in `src-cli`](https://github.com/sourcegraph/src-cli/blob/6cbaba6d47761b5f5041ed285aea686bf5b266c3/cmd/src/batch_preview.go)

1. That executes your batch spec, which means it [parses it, validates it, resolves the namespace, prepares the docker images, and checks which workspaces are required](https://github.com/sourcegraph/src-cli/blob/6cbaba6d47761b5f5041ed285aea686bf5b266c3/cmd/src/batch_common.go#L187:6)

1. Then, for each repository (or [workspace in each repository](https://docs.sourcegraph.com/batch_changes/how-tos/creating_changesets_per_project_in_monorepos)), it [runs the `steps` in the batch spec](https://github.com/sourcegraph/src-cli/blob/6cbaba6d47761b5f5041ed285aea686bf5b266c3/internal/batches/run_steps.go#L54) by downloading a repository archive, creating a workspace in which to execute the `steps`, and then starting the Docker containers.

1. If changes were produced in a repository, these changes are turned into a `ChangesetSpec` (a specification of what a changeset should look like on the code host - title, body, commit, etc.) and [uploaded to the Sourcegraph instance](https://github.com/sourcegraph/src-cli/blob/6cbaba6d47761b5f5041ed285aea686bf5b266c3/cmd/src/batch_common.go#L297-L324)

1. If changes were produced in a repository, these changes are turned into a `ChangesetSpec` (a specification of what a changeset should look like on the code host—title, body, commit, etc.) and [uploaded to the Sourcegraph instance](https://github.com/sourcegraph/src-cli/blob/6cbaba6d47761b5f5041ed285aea686bf5b266c3/cmd/src/batch_common.go#L297-L324)

1. `src batch preview`'s last step is then to [create a `BatchSpec` on the Sourcegraph instance](https://github.com/sourcegraph/src-cli/blob/6cbaba6d47761b5f5041ed285aea686bf5b266c3/cmd/src/batch_common.go#L331-L336), which is a collection of the `ChangesetSpec`s that you can then preview or apply

When you then click the "Preview the batch change" link that `src-cli` printed, you'll land on the preview page in the web frontend:

@@ -92,7 +92,7 @@ We also run [separate vulnerability scans for our infrastructure](https://handbo

### Pipeline health

Maintaining [Buildkite pipeline](#buildkite-pipelines) health is a critical part of ensuring we ship a stable product - changes that make it to the `main` branch may be deployed to various Sourcegraph instances, and having a reliable and predictable pipeline is crucial to ensuring bugs do not make it to production environments.

Maintaining [Buildkite pipeline](#buildkite-pipelines) health is a critical part of ensuring we ship a stable product—changes that make it to the `main` branch may be deployed to various Sourcegraph instances, and having a reliable and predictable pipeline is crucial to ensuring bugs do not make it to production environments.

To enable this, we [address flakes as they arise](#flakes) and mitigate the impacts of pipeline instability with [branch locks](#branch-locks).

@@ -102,7 +102,7 @@ To enable this, we [address flakes as they arise](#flakes) and mitigate the impa

> WARNING: **A red `main` build is not okay and must be fixed.** Learn more about our `main` branch policy in [Testing principles: Failures on the `main` branch](../testing_principles.md#failures-on-the-main-branch).

[`buildchecker`](#buildchecker) is a tool responding to periods of consecutive build failures on the `main` branch Sourcegraph Buildkite pipeline. If it detects a series of failures on the `main` branch, merges to `main` will be restricted to members of the Sourcegraph team who authored the failing commits until the issue is resolved - this is referred to as a "branch lock". When a build passes on `main` again, `buildchecker` will automatically unlock the branch.

[`buildchecker`](#buildchecker) is a tool responding to periods of consecutive build failures on the `main` branch Sourcegraph Buildkite pipeline. If it detects a series of failures on the `main` branch, merges to `main` will be restricted to members of the Sourcegraph team who authored the failing commits until the issue is resolved—this is referred to as a "branch lock". When a build passes on `main` again, `buildchecker` will automatically unlock the branch.
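
The locking decision can be sketched as follows. The `build` type, the newest-first ordering, and the failure threshold are assumptions for illustration; refer to the `buildchecker` source for its actual rules.

```go
package main

import (
	"fmt"
	"sort"
)

// build is a hypothetical summary of one `main` branch build.
type build struct {
	Passed bool
	Author string // author of the commit that was built
}

// checkBranch inspects builds ordered newest first. If the most recent
// `threshold` or more builds all failed, it reports that the branch should be
// locked and returns the authors of those failing builds, who may still merge.
// A passing newest build means the branch should be unlocked.
func checkBranch(builds []build, threshold int) (locked bool, allowed []string) {
	seen := map[string]bool{}
	failures := 0
	for _, b := range builds {
		if b.Passed {
			break // the failure streak ends at the most recent green build
		}
		failures++
		if !seen[b.Author] {
			seen[b.Author] = true
			allowed = append(allowed, b.Author)
		}
	}
	if failures < threshold {
		return false, nil
	}
	sort.Strings(allowed)
	return true, allowed
}

func main() {
	builds := []build{
		{Passed: false, Author: "bob"},
		{Passed: false, Author: "alice"},
		{Passed: false, Author: "bob"},
		{Passed: true, Author: "carol"},
	}
	locked, allowed := checkBranch(builds, 3)
	fmt.Println(locked, allowed) // true [alice bob]
}
```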

**Authors of the most recent failed builds are responsible for investigating failures.** Please refer to the [Continuous integration playbook](https://handbook.sourcegraph.com/departments/product-engineering/engineering/process/incidents/playbooks/ci#build-has-failed-on-the-main-branch) for step-by-step guides on what to do in various scenarios.

@@ -110,7 +110,7 @@ To enable this, we [address flakes as they arise](#flakes) and mitigate the impa

A *flake* is defined as a test or script that is unreliable or non-deterministic, i.e. it exhibits both a passing and a failing result with the same code. In other words: something that sometimes fails, but if you retry it enough times, it passes, *eventually*.

Tests are not the only thing that are flaky - flakes can also encompass [sporadic infrastructure issues](#flaky-infrastructure) and [unreliable steps](#flaky-steps).

Tests are not the only thing that are flaky—flakes can also encompass [sporadic infrastructure issues](#flaky-infrastructure) and [unreliable steps](#flaky-steps).
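
Under that definition, a flake shows up as different outcomes for the same code. A minimal Go sketch, assuming results are grouped by commit (a hypothetical data shape), flags a check as flaky when any single commit has both a pass and a failure:

```go
package main

import "fmt"

// isFlaky reports whether any commit has both passing and failing runs of the
// same check, i.e. the outcome varied while the code stayed the same.
// results maps a commit SHA to the outcomes of repeated runs (true = pass).
func isFlaky(results map[string][]bool) bool {
	for _, runs := range results {
		sawPass, sawFail := false, false
		for _, passed := range runs {
			if passed {
				sawPass = true
			} else {
				sawFail = true
			}
		}
		if sawPass && sawFail {
			return true
		}
	}
	return false
}

func main() {
	fmt.Println(isFlaky(map[string][]bool{"abc123": {false, false, true}}))      // true
	fmt.Println(isFlaky(map[string][]bool{"abc123": {false}, "def456": {true}})) // false
}
```

A check that fails consistently on one commit and passes on another is not flaky under this definition; it points to a real change in behaviour between the two commits.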

##### Flaky tests

@@ -200,7 +200,7 @@ If you want to be explictly notified (through a Slack ping) when your pull reque

[](https://github.com/sourcegraph/sourcegraph/actions/workflows/buildchecker.yml) [](https://github.com/sourcegraph/sourcegraph/actions/workflows/buildchecker-history.yml)

[`buildchecker`](https://github.com/sourcegraph/sourcegraph/actions/workflows/buildchecker.yml), our [branch lock management tool](#branch-locks), runs in GitHub actions - see the [workflow specification](https://github.com/sourcegraph/sourcegraph/blob/main/.github/workflows/buildchecker.yml).

[`buildchecker`](https://github.com/sourcegraph/sourcegraph/actions/workflows/buildchecker.yml), our [branch lock management tool](#branch-locks), runs in GitHub actions—see the [workflow specification](https://github.com/sourcegraph/sourcegraph/blob/main/.github/workflows/buildchecker.yml).

To learn more about `buildchecker`, refer to the [`buildchecker` source code and documentation](https://github.com/sourcegraph/sourcegraph/tree/main/dev/buildchecker).

@@ -208,7 +208,7 @@ To learn more about `buildchecker`, refer to the [`buildchecker` source code and

[](https://github.com/sourcegraph/sourcegraph/actions/workflows/pr-auditor.yml)

[`pr-auditor`](https://github.com/sourcegraph/sourcegraph/actions/workflows/pr-auditor.yml), our [PR audit tool](../testing_principles.md#policy), runs in GitHub actions - see the [workflow specification](https://github.com/sourcegraph/sourcegraph/blob/main/.github/workflows/pr-auditor.yml).

[`pr-auditor`](https://github.com/sourcegraph/sourcegraph/actions/workflows/pr-auditor.yml), our [PR audit tool](../testing_principles.md#policy), runs in GitHub actions—see the [workflow specification](https://github.com/sourcegraph/sourcegraph/blob/main/.github/workflows/pr-auditor.yml).

To learn more about `pr-auditor`, refer to the [`pr-auditor` source code and documentation](https://github.com/sourcegraph/sourcegraph/tree/main/dev/pr-auditor).

@@ -236,6 +236,6 @@ The `license_finder` tool can be installed using `gem install license_finder`. Y

LICENSE_CHECK=true ./dev/licenses.sh

```

The `./dev/licenses.sh` script will also output some `license_finder` configuration for debugging purposes - this configuration is based on the `doc/dependency_decisions.yml` file, which tracks decisions made about licenses and dependencies.

The `./dev/licenses.sh` script will also output some `license_finder` configuration for debugging purposes—this configuration is based on the `doc/dependency_decisions.yml` file, which tracks decisions made about licenses and dependencies.

For more details, refer to the [`license_finder` documentation](https://github.com/pivotal/LicenseFinder#usage).

@@ -224,7 +224,7 @@ Insights can then be [created either via the locally running webapp](../../../co

## Debugging

This being a pretty complex, high cardinality, and slow-moving system - debugging can be tricky.

This being a pretty complex, high cardinality, and slow-moving system—debugging can be tricky.

In this section, I'll cover useful tips I have for debugging the system when developing it or otherwise using it.

@@ -60,7 +60,7 @@ in [core/types/insights/index.ts](https://sourcegraph.com/github.com/sourcegraph

## Insight configuration storing

To be able to fetch data for an insight, we need to store some insight configuration (input data), such as

data series config - line color, search query string, the title of data series.

data series config—line color, search query string, the title of data series.

We use the setting cascade to store these insight configurations. For example, Search based insight configuration looks

something like this

@@ -19,14 +19,14 @@ How relevant containers are identified from exported cAdvisor metrics is documen

Because cAdvisor runs on a *machine* and exports *container* metrics, standard strategies for identifying what container a metric belongs to (such as Prometheus scrape target labels) cannot be used, because all the metrics look like they belong to cAdvisor.

Making things more complicated, how containers are identified varies across environments (namely Kubernetes and docker-compose), sometimes due to characteristics of the environments and sometimes due to naming inconsistencies within Sourcegraph.

Variations in how cAdvisor generates the `name` label it provides also make things difficult (in some environments, it cannot generate one at all!).

This means that cAdvisor can pick up non-Sourcegraph metrics, which can be problematic - see [known issues](#known-issues) for more details and current workarounds.

This means that cAdvisor can pick up non-Sourcegraph metrics, which can be problematic—see [known issues](#known-issues) for more details and current workarounds.

## Available metrics

Exported metrics are documented in the [cAdvisor Prometheus metrics list](https://github.com/google/cadvisor/blob/master/docs/storage/prometheus.md#prometheus-container-metrics).

In the list, the column `-disable_metrics parameter` indicates the "group" the metric belongs in.

Container runtime and deployment environment compatibility for various metrics seems to follow these groups - before using a metric, ensure that the metric is supported in all relevant environments (for example, both Docker and `containerd` container runtimes).

Container runtime and deployment environment compatibility for various metrics seems to follow these groups—before using a metric, ensure that the metric is supported in all relevant environments (for example, both Docker and `containerd` container runtimes).

Support is generally poorly documented, but a search through the [cAdvisor repository issues](https://github.com/google/cadvisor/issues) might provide some hints.

## Known issues

@@ -22,7 +22,7 @@ These include:

- [Alert solutions reference](https://docs.sourcegraph.com/admin/observability/alerts)

- [Dashboards reference](https://docs.sourcegraph.com/admin/observability/dashboards)

Links to generated documentation can be provided in our other generated integrations - for example, [Slack alerts](https://docs.sourcegraph.com/admin/observability/alerting#setting-up-alerting) will provide a link to the appropriate alert solutions entry, and [Grafana panels](#grafana-integration) will link to the appropriate dashboards reference entry.

Links to generated documentation can be provided in our other generated integrations—for example, [Slack alerts](https://docs.sourcegraph.com/admin/observability/alerting#setting-up-alerting) will provide a link to the appropriate alert solutions entry, and [Grafana panels](#grafana-integration) will link to the appropriate dashboards reference entry.

### Grafana integration

@@ -39,7 +39,7 @@ It also takes care of the following:

- Configuring annotation layers for alert events and version changes

- Maintaining a uniform look and feel across all dashboards

Links to generated documentation can be provided in our other generated integrations - for example, [Slack alerts](https://docs.sourcegraph.com/admin/observability/alerting#setting-up-alerting) will provide a link to the appropriate service's dashboard.

Links to generated documentation can be provided in our other generated integrations—for example, [Slack alerts](https://docs.sourcegraph.com/admin/observability/alerting#setting-up-alerting) will provide a link to the appropriate service's dashboard.

### Prometheus integration

@@ -32,7 +32,7 @@ To learn more about developing our observability stack, see the [local Sourcegra

The [Sourcegraph Prometheus image ships with Alertmanager](https://sourcegraph.com/search?q=repo:%5Egithub%5C.com/sourcegraph/sourcegraph%24+file:%5Edocker-images/prometheus/Dockerfile+FROM+prom/alertmanager&patternType=literal), which provides our [alerting capabilities](../../../admin/observability/alerting.md).

Note that [prom-wrapper](#prom-wrapper) uses a [fork of Alertmanager](https://github.com/sourcegraph/alertmanager) to better manipulate Alertmanager configuration - prom-wrapper needs to be able to write alertmanager configuration with secrets, etc, which the Alertmanager project is currently not planning on accepting changes for ([alertmanager#2316](https://github.com/prometheus/alertmanager/pull/2316)).

Note that [prom-wrapper](#prom-wrapper) uses a [fork of Alertmanager](https://github.com/sourcegraph/alertmanager) to better manipulate Alertmanager configuration—prom-wrapper needs to be able to write alertmanager configuration with secrets, etc, which the Alertmanager project is currently not planning on accepting changes for ([alertmanager#2316](https://github.com/prometheus/alertmanager/pull/2316)).

This *does not* affect the version of Alertmanager that we ship with; the fork exists purely for use as a library.

## Upgrading Prometheus or Alertmanager

@@ -10,7 +10,7 @@ Out-of-band migrations allow for application-specific logic to exist in a migrat

- fetching data from another remote API or data store based on existing data

- transforming large scale data

Remember - the longer we block application startup on migrations, the more vulnerable an instance will become to downtime as no _new_ frontend containers will be able to service requests. In these cases, you should define an _out of band_ migration, which is run in the background of the application over time instead of at startup.

Remember—the longer we block application startup on migrations, the more vulnerable an instance will become to downtime as no _new_ frontend containers will be able to service requests. In these cases, you should define an _out of band_ migration, which is run in the background of the application over time instead of at startup.

Some background tasks may seem initially well-suited for an out-of-band migration, but may actually be better installed as a permanent background job that runs periodically. Such jobs include data transformations that require external state to determine their progress. For example, database encryption jobs were originally written as out-of-band migrations. However, changing the external key in the site configuration can drop progress back to 0%, despite having already run to completion.

@@ -23,7 +23,7 @@ See the [Quickstart guide](../setup/quickstart.md).

## Migrations

Migrations get applied automatically at application startup - you

Migrations get applied automatically at application startup—you

shouldn't need to run anything by hand. For full documentation see

[migrations/README.md](https://github.com/sourcegraph/sourcegraph/blob/main/migrations/README.md)

@@ -134,7 +134,7 @@ Special care should be taken when reviewing a diff that contains, or is adjacent

### When is an in-depth review not required?

We do technically prevent PRs from being merged before being explicitly approved in order to meet security certification compliance requirements. However, there exist PRs that are appropriate to merge without waiting for a full review - in these cases, a reviewer may opt to give an approval without performing an in-depth review, and the author may opt to merge the PR without waiting for a reviewer to provide an in-depth review.

We do technically prevent PRs from being merged before being explicitly approved in order to meet security certification compliance requirements. However, there exist PRs that are appropriate to merge without waiting for a full review—in these cases, a reviewer may opt to give an approval without performing an in-depth review, and the author may opt to merge the PR without waiting for a reviewer to provide an in-depth review.

> NOTE: When in doubt, reviewers should prefer to provide an in-depth review, and authors should prefer to wait for an in-depth review.

@ -275,9 +275,9 @@ Flags:

Get logs from CI builds (e.g. to grep locally).

Get logs from CI builds, and output them in stdout or push them to Loki. By default only gets failed jobs - to change this, use the '--state' flag.

Get logs from CI builds, and output them in stdout or push them to Loki. By default only gets failed jobs—to change this, use the '--state' flag.

The '--job' flag can be used to narrow down the logs returned - you can provide either the ID, or part of the name of the job you want to see logs for.

The '--job' flag can be used to narrow down the logs returned—you can provide either the ID, or part of the name of the job you want to see logs for.

To send logs to a Loki instance, you can provide --out=http://127.0.0.1:3100 after spinning up an instance with 'sg run loki grafana'.

From there, you can start exploring logs with the Grafana explore panel.

@ -688,7 +688,7 @@ Flags:

### sg migration upto

Ensure a given migration has been applied - may apply dependency migrations.

Ensure a given migration has been applied—may apply dependency migrations.

Available schemas:

@ -713,7 +713,7 @@ Flags:

### sg migration undo

Revert the last migration applied - useful in local development.

Revert the last migration applied—useful in local development.

Available schemas:

@ -732,7 +732,7 @@ Flags:

### sg migration downto

Revert any applied migrations that are children of the given targets - this effectively "resets" the schema to the target version.

Revert any applied migrations that are children of the given targets—this effectively "resets" the schema to the target version.

Available schemas:

@ -1038,7 +1038,7 @@ Also refer to the generated reference documentation available for site admins:

### sg monitoring generate

Generate monitoring assets - dashboards, alerts, and more.

Generate monitoring assets—dashboards, alerts, and more.

```sh

# Generate all monitoring with default configuration into a temporary directory

@ -1442,7 +1442,7 @@ Flags:

## sg page

Page engineers at Sourcegraph - mostly used within scripts to automate paging alerts.

Page engineers at Sourcegraph—mostly used within scripts to automate paging alerts.

```sh

$ sg page --opsgenie.token [TOKEN] --message "something is broken" [my-schedule-on-ops-genie]

@ -56,7 +56,7 @@ _On the `3.X` branch:_

_On the `3.{X+1}` branch:_

1. Remove all writes to column `c1` as there are no more _exclusive_ readers of this column - all readers are able to read from column `c2` as well.

1. Remove all writes to column `c1` as there are no more _exclusive_ readers of this column—all readers are able to read from column `c2` as well.

1. Create a regular migration or an [out-of-band migration](../oobmigrations.md) that backfills values for column `c2` from column `c1`. Out-of-band migrations should be preferred for large or non-trivial migrations, and must be used if non-Postgres compute is required to convert values of the old format into the new format.

If using a regular migration, continue immediately. If using an out-of-band migration, mark it deprecated at some future version `3.{X+Y}` and wait for this version's branch cut; out-of-band migrations are not guaranteed to have completed until the underlying instance has been upgraded past the migration's deprecation version. This means there may exist yet-to-be-migrated rows with a value for `c1` but no value for column `c2` until this version.

@ -19,7 +19,7 @@ Engineers should budget an appropriate amount of time for ensuring [test plans](

**All pull requests must provide test plans** that indicate what has been done to test the changes being introduced. This can be done with a "Test plan" section within a pull request's description.

Some pull requests may not require a rigorous test plan - see [Exceptions](#exceptions).

Some pull requests may not require a rigorous test plan—see [Exceptions](#exceptions).

## Types of tests

@ -63,7 +63,7 @@ Benefits:

- To the extent that fewer systems are under test compared to e2e tests, they are faster to run, easier to debug, have clearer ownership, and less vulnerable to [flakiness](#flaky-tests).

- They only need to run on changes that touch code which could make the test fail, which makes CI faster and minimizes the impact of any [flakiness](#flaky-tests).

- For UI behavior, they run in an actual browser - rather than a JSDOM environment.

- For UI behavior, they run in an actual browser—rather than a JSDOM environment.

Tradeoffs:

@ -118,7 +118,7 @@ We use [Percy](https://percy.io/) to detect visual changes in Sourcegraph featur

- Introduce adequate [observability measures](observability/index.md) so that issues can easily be detected and monitored.

- Documentation can help ensure that changes are easy to understand if anything goes wrong, and should be added to [sources of truth](https://handbook.sourcegraph.com/company-info-and-process/communication#sources-of-truth).

- If the documentation will be published to docs.sourcegraph.com, it can be tested by running `sg run docsite` and navigating to the corrected page.
